How concerned are you about the information you get from voice assistants like Siri, Alexa, or Google Assistant? Have you ever thought about it before? Those questions, and their answers, are on Ariam Mogos’ mind.

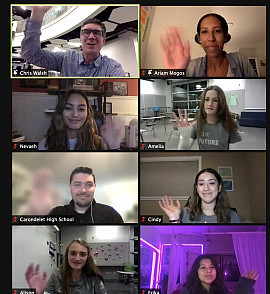

On March 18, Innovators@Carondelet invited Mogos, a Futurist Fellow at Stanford’s d.school, to join the Carondelet community via webinar and present on the stories our data can tell, and on how the stories it leaves out can contribute to harm.

“It’s important to reflect and act on our own biases as creators,” Mogos said.

Artificial intelligence and other technologies are created by humans, so human biases can become embedded in virtual assistants that are meant to know everything.

“There are all types of biases that do lots of harm to non-dominant groups,” said Mogos. She pointed out that data sets lacking diversity can harm people of color, for example when a system fails to take accurate measurements across different skin tones.

Throughout her talk, Mogos posed hard questions while also offering hope for the future of ethical AI technology.

Mogos also pointed out that there are ways we can do better. “When we truly live amongst each other and engage, we can really learn about one another from a place of empathy and intention,” she said. “We have the right to decide, and we can choose what we want. But that means people have to participate.”

Her presentation was facilitated by Innovators@Carondelet, which has hosted an ongoing speaker series since last year, bringing in engaging speakers to present on fascinating topics.

Missed the presentation? Mogos’ full presentation will be available here next week. A student also took over our Twitter account to share live updates and insights on the timeline.

Stay tuned for information on the next installment of the Speaker Series from Innovators@Carondelet.